Bike Share Analysis using Python and Power BI (Part 1)

This is my first project written in the form of an article through the medium. This project is a task that I completed after completing the google data analytics certificate program. I hope that by completing this project I can improve and sharpen my analysis. I will divide this article into two parts where the first part focuses on discussing data cleaning and transformation of the data while the second part is the core of the topic, namely the results of the analysis.

About company

In 2016, Cyclistic launched a successful bike-sharing offering. Bikes can be accessed from one station and returned to another at any time in the system. Customers who purchase a one-way ticket or a full day ticket are referred to as casual riders. Customers who purchase an annual membership are Cyclistic members. Cyclistic financial analysts have concluded that annual members are much more profitable than regular riders. While pricing flexibility is helping Cyclistic attract more customers, directur of marketing believes that maximizing the number of annual members will be the key to future growth. Directur of marketing has set a clear goal: Designing a marketing strategy aimed at converting regular riders into annual members.

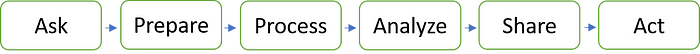

Methodology

methodology or data analysis process phase in the early to late stages I use the sequence that I have learned after completing the course google data analytics.

1. Ask

as the marketing director asked, before deciding what kind of campaign to do, a data analyst is given the task of looking at a trend so that the campaign runs on target. The goal of this analysis is Designing a marketing strategy at converting regular riders into annual members. The first thing that comes to mind after understanding the purpose and columns of the entire data:

1. The comparison of the last year is the number of annual members vs regular members

2. Total trips and average trips based on the type of bike used

3. Total trips and average trips by hour

4. Total trips and average trips by day

5. Total trips from and to station by user type

2. Prepare

Data can be download from here from this link. Data is stored in csv format files, in this analysis the period taken is one full year of data starting from October 2021 to September 2022. There 13 columns: ride_id, rideable_type, started_at, ended_at, start_station_name, start_station_id, end_station_name, end_station_id, start_lat, start_lng, nd_lat, end_lng, member_casual. The type of rider is recorded in one of the areas within the information set. casual riders pay for person or day by day rides, whereas part riders pay for yearly memberships. This information is required to identify the contrasts within the way the two bunches utilize the bicycle share program.

3. Process

Process phase is used to find and eliminate errors and inaccuracies that can affect the results of the analysis (data cleaning). data cleaning includes adjusting data formats, combining some additional useful data to support analysis results, eliminating outliers, etc. the tool i use in data cleaning is python programming language. This language is very powerful with its pandas support library which can manipulate dataframes.

We know pandas is a library for manipulating data, I use pyplot myself for visualization, and glob to find word patterns. Because the files are downloaded separately (monthly) we need to merge using the concat function which will be stored in one file, in the code above the results of the merger are stored in the df variable. To find the number of rows and columns use the df.shape method, while to display the first 5 data use the df.head() method. df.info() is an alternative to find out information in a table such as the amount of data, column names, and data types in each column.

isnull() or isna() methods are used to check and manage NULL values in a data frame, but if you want to be specific to know how many rows there are NaN values add the sum() method.

Make sure there is no duplicate data, because each record_id is unique or as a primary key. drop_duplicates() will help in eliminating duplicate data, while to remove nan values you can use the dropna() method. The results can be seen that the reduction occurs as much as 1,340,237 rows. Now the data looks clean, after this let’s transform the data so that it is more ready for analysis.

In the membercasual column there are member and casual values, I think it will be easier if the column name is changed to usertype and the value is divided into member and non-member.

Let’s create a new column ride_length the result of reducing the start-end time trip. This will be useful when doing analysis but before that it is necessary to convert the start_at and end_at columns to datetime using pd.to_datetime() method because they were previously in object format. After the ride_length column is created so that it can be calculated I need to create a new column again containing the ride_length conversion result in minutes format.

To find the weekly pattern, use the start_at column by using the dt.day_name() method which will output the name of the day of the week which will be stored in the dayofweek column. The next cell is very optional if you want the ride_length column previously created to only display the format hh:mm:ss you can follow the code above.

I want to make sure that the result of the ride_length calculation has no outliers, but after checking it turns out that there are 72 rows that have minus values. Of course this doesn’t make sense because the return time or end_at is greater than start_at so I want to delete that row. For additional analysis I want to add two columns to explain the details of time such as the hour of each rental departure and the month-year on the date using dt.hour() and dt.to_period(‘M’). Last thing is to save the data cleaning result file to be used for data analysis and answer business questions that have been determined by the marketing director.

Part two will focus on discussing exploratory data analysis, you can find it here.